COM Express SBC Utilizes Intel Iris Xe Graphics for Deep Learning AI

In 1972, TOMRA, a Norwegian firm, pioneered the first reverse vending machine (RVM), which incentivises people to recycle by giving them a reward in exchange for a plastic bottle, glass bottle, or aluminum can. After an item is inserted, it is scanned; once approved, it is sorted, crushed, and stored in the appropriate container, and the user is rewarded with either cash or coupons.

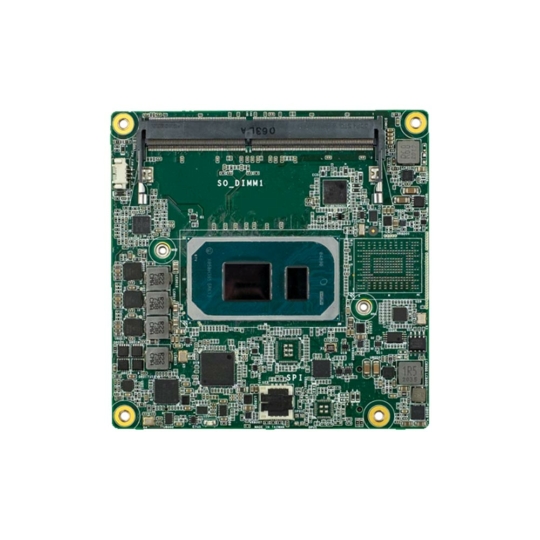

The latest COM Express board by DFI, the TGU968, has been integrated into an advanced reverse vending machine that makes the recycling process much more efficient. By utilising the board’s latest Intel Core CPU and Iris Xe GPU technology, the machine processes items up to five times faster than traditional single-feed solutions, drastically cutting the time the process takes. This helps to reduce overall waste and improves the efficiency and effectiveness of the recycling process. The reverse vending machine industry is changing rapidly, and selecting a COM Express solution is essential to simplifying the system architecture and to ensuring that manufacturers can update and improve their technology in the future.

The 11th Generation ‘Tiger Lake’ Intel Core i5 and i7 CPUs on the compact TGU968 COM Express modules include integrated Iris Xe graphics. Together, the CPU and GPU enable faster execution of machine vision and deep learning AI tasks, significantly improving the efficiency and accuracy of reverse vending machines. The Iris Xe GPU is an integrated graphics processing unit placed on the same die as the processor cores, meaning it is built directly into the CPU. It shares system memory with the processor instead of using dedicated graphics memory chips, which reduces the size and power requirements of the system and allows for more efficient use of resources. It is also cost-effective, addressing what has traditionally been a barrier to entry for AI applications: the cost of the hardware.

“The combination of Intel Xe graphics and the OpenVINO inferencing engine has enabled us to achieve performance levels that have previously only been seen on much more expensive AI computing platforms. This cutting-edge technology has the potential to open up AI to wider audiences and give software developers and ML professionals the tools they need to create applications that can make the world a better place.” Andrew Whitehouse, CEO at Things Embedded.

Intel Core i5 & i7 Tiger Lake Embedded Systems with Integrated Iris Xe Graphics

DFI TGU968 COM Module

Enabling Low Cost Edge AI with OpenVINO and Intel Iris Xe GPUs

OpenVINO and Intel Iris Xe GPUs can enable low-cost edge AI by providing optimized deep learning performance on low-power and cost-effective embedded edge AI computers.

Firstly, OpenVINO allows developers to optimize deep learning models for deployment on a range of embedded systems, including low-power CPUs and integrated GPUs (earlier releases of the toolkit also supported FPGAs). This means that even low-cost devices can run demanding AI applications, making it possible to bring AI to the edge without requiring expensive hardware.

Secondly, Intel Iris Xe GPUs offer high-performance and energy-efficient computing, which is ideal for running AI workloads on edge computers. The Iris Xe GPU architecture is designed to provide high-performance graphics and compute capabilities with a low power budget, making it a cost-effective solution for edge AI applications.

When combined, OpenVINO and Intel Iris Xe GPUs can help developers create cost-effective AI solutions that can run on edge devices with limited computational resources.
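As an illustration of how an application might target the integrated GPU with OpenVINO, here is a minimal sketch in Python. It assumes the `openvino` package (2023.1 or later, using the `import openvino as ov` style) and a model already converted to OpenVINO IR at a hypothetical path such as `model.xml`. The helper `pick_device` and the preference order (GPU first, CPU as fallback) are illustrative choices, not part of the OpenVINO API.

```python
from typing import Sequence

# Device families in order of preference: the integrated Iris Xe GPU first,
# then the CPU as a fallback. OpenVINO reports devices by names such as
# "CPU", "GPU", or "GPU.0"/"GPU.1" when more than one GPU is present.
PREFERRED_FAMILIES = ("GPU", "CPU")


def pick_device(available: Sequence[str],
                preferred: Sequence[str] = PREFERRED_FAMILIES) -> str:
    """Return the first available device whose family matches the preference order."""
    for family in preferred:
        for name in available:
            if name == family or name.startswith(family + "."):
                return name
    raise RuntimeError(f"none of {list(preferred)} found in {list(available)}")


def compile_for_edge(model_path: str):
    """Load an OpenVINO IR model and compile it for the preferred device.

    Requires the `openvino` Python package. `model_path` is a hypothetical
    path to an IR file such as "model.xml".
    """
    # Imported lazily so that pick_device() can be used without OpenVINO installed.
    import openvino as ov

    core = ov.Core()
    device = pick_device(core.available_devices)
    model = core.read_model(model_path)
    return core.compile_model(model, device), device
```

On a TGU968-class target with working Intel GPU drivers, `compile_for_edge("model.xml")` would be expected to select the `GPU` device. Alternatively, passing OpenVINO's built-in `"AUTO"` device name to `compile_model` delegates the device choice to the runtime instead of hand-coding a preference order.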

Benefits of Intel Iris Xe GPUs

Intel’s latest Iris Xe GPU series is engineered to meet the needs of deep learning AI workloads at the edge. Compared with previous generations of Intel integrated graphics, it delivers higher performance, faster speeds, and an easier design process for a wide range of industrial applications. Its execution units are built around vector arithmetic logic units and add INT8 dot-product (DP4a) instructions that accelerate quantized inference, and these units are optimized for efficient use with the OpenVINO inferencing engine.

This low-power design handles large, complex workloads, and the GPU’s media engine also improves video encoding performance. When combined with OpenVINO, Iris Xe GPUs offer strong ML performance at a lower cost than many other AI computing platforms. Their power-saving design further benefits edge deployments by reducing operating costs, even when the system is handling multiple tasks or AI operations.

For more demanding workloads, such as training large deep learning models, specialized hardware such as GPUs designed specifically for deep learning may be required. Nonetheless, Iris Xe GPUs can be a great choice for inference, the stage in which applications use trained deep learning models to make predictions or decisions on new data.
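To make the training/inference distinction concrete, here is a minimal sketch in Python. It assumes a toy logistic-regression "model" whose weights (hypothetical values) were learned elsewhere during training; inference is then just a forward pass with those frozen parameters, with no gradient computation or weight updates. A real reverse vending machine would instead run a trained DNN through OpenVINO, and the three input features here are placeholders.

```python
import math
from typing import Sequence

# Hypothetical parameters, assumed to have been learned offline during training
# (e.g. on a workstation or cloud GPU). At the edge they are frozen constants.
WEIGHTS = (2.0, -1.5, 0.5)
BIAS = -0.25


def predict_proba(features: Sequence[float]) -> float:
    """Inference: one forward pass of the toy model on new data."""
    if len(features) != len(WEIGHTS):
        raise ValueError("feature vector has the wrong length")
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    # The sigmoid squashes the linear score into a probability in (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def accept_item(features: Sequence[float], threshold: float = 0.5) -> bool:
    """Turn the model's probability into a decision, e.g. accept or reject a container."""
    return predict_proba(features) >= threshold
```

Nothing in this code changes the weights, which is why inference can run on modest, power-efficient hardware while training happens elsewhere.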

Benefits of Intel OpenVINO

In recent years, deep neural networks (DNNs) have significantly improved the accuracy of computer vision techniques across multiple industries. OpenVINO is a cross-platform deep learning toolkit that uses its various tools to optimize DNNs for fast, efficient inference. It supports state-of-the-art neural network architectures, including convolutional, recurrent, and attention-based models.
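One common optimization in this kind of toolkit is post-training INT8 quantization (in the OpenVINO ecosystem this is handled by Intel's Neural Network Compression Framework, NNCF), which shrinks weights from 32-bit floats to 8-bit integers to cut memory traffic and compute. The sketch below shows only the arithmetic of symmetric per-tensor quantization in plain Python; it is not NNCF's API or its exact algorithm, which also calibrates activations on sample data.

```python
from typing import List, Sequence, Tuple

INT8_MAX = 127  # symmetric integer range [-127, 127]


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: q = round(x / scale), clamped."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Guard against an all-zero tensor, where any non-zero scale would do.
    scale = max_abs / INT8_MAX if max_abs > 0 else 1.0
    q = [max(-INT8_MAX, min(INT8_MAX, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: Sequence[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate float values."""
    return [qi * scale for qi in q]
```

Because each value is rounded to the nearest multiple of `scale`, the reconstruction error per weight is at most `scale / 2`, which is why well-behaved models usually lose little accuracy when quantized this way.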

Intel’s OpenVINO platform is aimed at accelerating deep learning AI workloads and shortening developers’ time to market by providing a library of predefined functions as well as pre-optimised kernels. The OpenVINO toolkit also includes additional computer vision tools, such as OpenCV and OpenCL kernels. Once the application is defined, it can be easily deployed to edge devices at scale using a platform such as Viso Suite.
